From generating human-like conversations and photorealistic images to writing code, music, and even news articles, generative AI models such as GPT-4.5 and DALL·E have captured the world’s attention. These systems, trained on vast datasets to create content that mimics human output, are rapidly transforming industries and workflows. But with great capability comes a pressing question: how do we ensure these models are used ethically?

As AI becomes more embedded in daily life, the ethical considerations surrounding its development and deployment have shifted from academic discussion to urgent societal debate. Generative models, in particular, bring new challenges that demand careful scrutiny, transparency, and regulation.

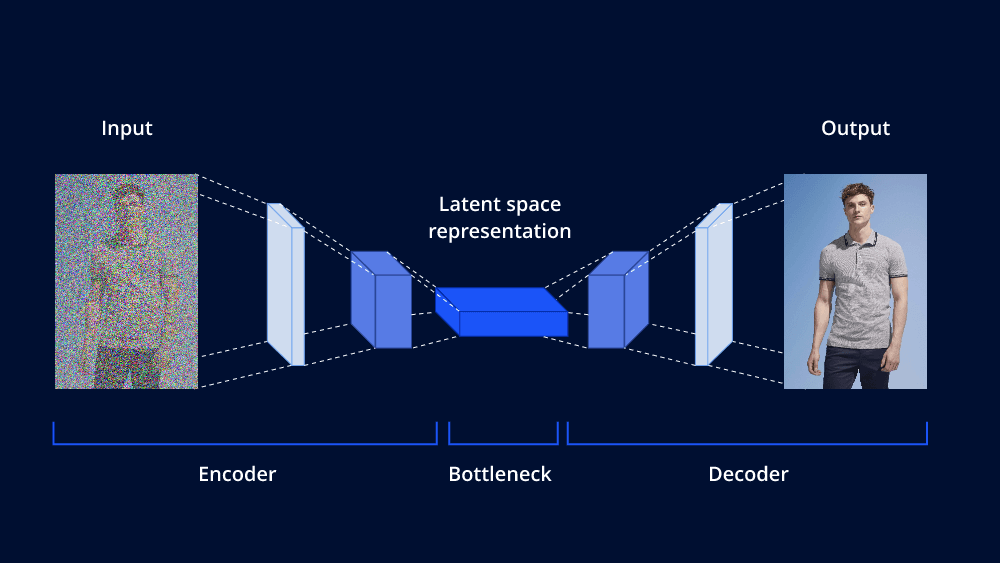

What Are Generative Models?

Generative models are a class of AI systems designed to create new content (text, images, audio, or video) based on patterns learned from existing data. Unlike discriminative models, which classify an input or predict a label for it, generative models produce new samples that resemble the data they were trained on.

Famous examples include:

- GPT-4.5 – generates human-like text for a wide range of applications

- DALL·E – creates original images from textual prompts

- Codex / Copilot – writes programming code

- Sora (by OpenAI) – generates video content from text descriptions

These tools are powerful: they democratize access to creative work and automate complex tasks. But their very ability to mimic human expression and judgment raises serious ethical questions.

Key Ethical Concerns in the Age of Generative AI

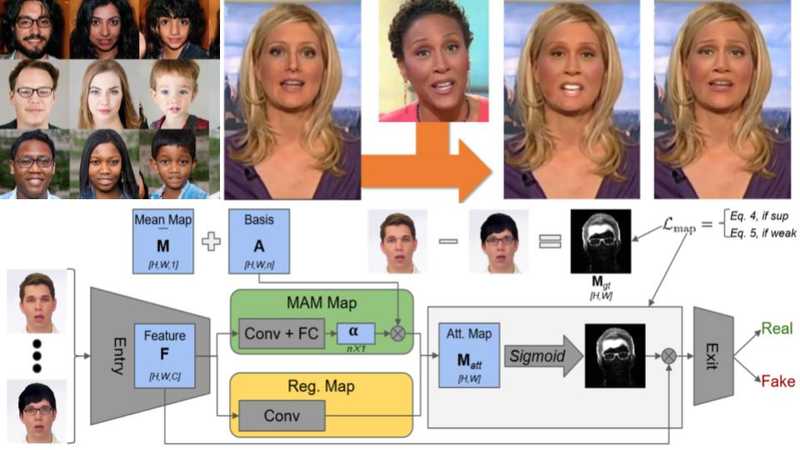

1. Misinformation and Deepfakes

Generative models can produce highly convincing fake content (articles, videos, voice recordings) that is difficult to distinguish from authentic material. This has implications for:

- Political manipulation (e.g., deepfake videos of world leaders)

- Fake news and propaganda at unprecedented scale

- Scams and impersonation (e.g., mimicking someone’s voice or writing style)

Without effective detection mechanisms or content provenance systems, the line between truth and fabrication becomes dangerously blurry.
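To make the idea of content provenance more concrete, here is a minimal sketch in Python. It assumes a generator that attaches a signed record (content hash plus generator name) to everything it produces, so a verifier holding the same key can check whether a file is unmodified and really came from that generator. The function names, the record fields, and the shared `SECRET_KEY` are all hypothetical; real provenance standards such as C2PA rely on public-key signatures and embedded manifests rather than a shared secret.

```python
import hashlib
import hmac
import json

# Hypothetical signing key; a real system would load it from secure storage.
SECRET_KEY = b"demo-key-not-for-production"


def make_provenance_record(content: bytes, generator: str) -> dict:
    """Build a signed record stating which generator produced the content."""
    payload = {
        "sha256": hashlib.sha256(content).hexdigest(),
        "generator": generator,
        "ai_generated": True,
    }
    # Serialize with sorted keys so signer and verifier sign identical bytes.
    message = json.dumps(payload, sort_keys=True).encode("utf-8")
    signature = hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()
    return {**payload, "signature": signature}


def verify_provenance(content: bytes, record: dict) -> bool:
    """Return True only if the record is authentic and matches the content."""
    payload = {k: v for k, v in record.items() if k != "signature"}
    message = json.dumps(payload, sort_keys=True).encode("utf-8")
    expected = hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, record.get("signature", ""))
    content_ok = payload.get("sha256") == hashlib.sha256(content).hexdigest()
    return signature_ok and content_ok


record = make_provenance_record(b"An AI-written paragraph.", "demo-model")
print(verify_provenance(b"An AI-written paragraph.", record))  # unmodified content
print(verify_provenance(b"An edited paragraph.", record))      # altered content
```

A scheme like this only proves where labeled content came from. It cannot flag synthetic content that was never labeled, which is why provenance and detection are usually discussed together.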

2. Bias and Discrimination

AI models are trained on human-generated data, which reflects existing social biases. As a result, generative models can reproduce and amplify harmful stereotypes related to race, gender, religion, or class.

Examples include:

- Image models generating biased depictions of certain professions

- Language models reflecting toxic internet discourse

- Biased hiring recommendations or medical advice when AI output is used uncritically

Addressing these biases requires not only better data and fine-tuning, but also deeper reflection on whose perspectives are included—or excluded—in training datasets.

3. Intellectual Property and Creative Ownership

When a generative model produces a painting in the style of Van Gogh, or writes a poem that mirrors the tone of a famous author, who owns the result?

Key IP-related dilemmas:

- Do creators whose works trained the AI deserve compensation?

- Can companies copyright AI-generated work?

- What rights do users have over content they prompt into existence?

Legal systems worldwide are scrambling to adapt, but the boundaries of authorship and ownership remain unsettled.

4. Labor Displacement and Economic Impact

As generative AI takes on more tasks in writing, design, customer support, and software development, concerns grow about job loss and economic inequality.

Industries potentially affected include:

- Journalism and content creation

- Graphic design and animation

- Legal and administrative work

- Software engineering

While AI can augment human productivity, there’s a risk that companies may prioritize automation without investing in retraining or creating new human-centric roles.

5. Consent and Data Privacy

Much of what generative models learn comes from publicly available data scraped from the web, often without the knowledge or consent of its creators. This includes:

- Social media posts

- Forum comments

- Public code repositories

- News articles and artwork

This raises major questions:

- Should creators have a right to opt out of training datasets?

- Can individuals demand that their likeness or voice not be replicated?

- What happens when models memorize and regurgitate private data?

Several lawsuits (e.g., from artists and writers) are already challenging the legality of current training practices.
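The memorization question raised above can be probed with a simple overlap check. The sketch below, in Python, measures what fraction of a model output's word n-grams appear verbatim in a set of training documents. The function names and the default window of 8 words are illustrative assumptions, not an established benchmark; a high score is a signal for closer review, not proof of a privacy violation.

```python
def ngrams(tokens, n):
    """Return the set of all n-word windows in a token list."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def verbatim_overlap(output, training_docs, n=8):
    """Fraction of the output's n-grams that occur verbatim in training docs.

    Returns 0.0 when the output is shorter than n words.
    """
    output_grams = ngrams(output.lower().split(), n)
    if not output_grams:
        return 0.0
    seen = set()
    for doc in training_docs:
        seen |= ngrams(doc.lower().split(), n)
    return len(output_grams & seen) / len(output_grams)


# Hypothetical training document containing personal details.
docs = ["my phone number is 555 0100 call me any time after six"]
print(verbatim_overlap("my phone number is 555 0100 call me", docs, n=4))
print(verbatim_overlap("the weather today is sunny and warm outside", docs, n=4))
```

Whitespace tokenization keeps the example short. A production check would more plausibly use the model's own tokenizer and an index over the training corpus rather than rebuilding n-gram sets on every call.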

Building Ethical Generative AI: Principles and Practices

Creating responsible AI isn’t just about patching problems after the fact—it requires ethical thinking at every stage of development. Here are key pillars for building generative AI ethically:

1. Transparency

- Disclose what data was used to train the model

- Clearly mark AI-generated content

- Provide users with information about how models work and what limitations they have

2. Accountability

- Establish clear lines of responsibility when AI causes harm

- Provide mechanisms for appeal or redress when people are misrepresented, impersonated, or otherwise harmed by AI

3. Fairness

- Audit datasets and outputs for demographic bias

- Include diverse voices in training data and development teams

- Regularly evaluate performance across different populations
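As a rough illustration of what auditing outputs across populations can look like, the Python sketch below computes the rate of positive outcomes per group and the largest gap between any two groups (often called a demographic parity gap). The group labels, the sample data, and the function names are hypothetical, and this is only one of several fairness measures an audit might use.

```python
from collections import defaultdict


def rates_by_group(records):
    """Compute the share of positive outcomes for each group.

    `records` is an iterable of (group, outcome) pairs, with outcome 0 or 1.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Largest difference in positive-outcome rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical audit log: did the system recommend the candidate (1) or not (0)?
audit_log = [
    ("group_a", 1), ("group_a", 1), ("group_a", 0), ("group_a", 0),
    ("group_b", 1), ("group_b", 0), ("group_b", 0), ("group_b", 0),
]
rates = rates_by_group(audit_log)
print(rates)              # group_a: 0.5, group_b: 0.25
print(parity_gap(rates))  # 0.25
```

A gap near zero does not by itself show a system is fair, and a large gap does not by itself show discrimination. The number is a prompt for the deeper review of data and design that the points above call for.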

4. User Agency

- Give users control over how their data is used

- Allow opt-outs from data collection or model training

- Enable humans to override or fact-check AI-generated content

5. Guardrails and Safeguards

- Apply content filters to block dangerous or illegal output (e.g., hate speech, weapons design)

- Limit the ability to generate deceptive or harmful material

- Use reinforcement learning from human feedback (RLHF) to steer models toward outputs that human reviewers judge to be helpful and safe
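The content filters listed above can be pictured as a check that runs on model output before it reaches the user. The following Python sketch uses a small pattern blocklist purely for illustration. The categories, patterns, and function names are hypothetical, and deployed systems typically rely on trained classifiers and human review rather than keyword matching, which is easy to evade and prone to false positives.

```python
import re

# Hypothetical blocklist: category name -> pattern that triggers it.
BLOCKED_PATTERNS = {
    "weapons": re.compile(r"\b(?:build|make)\s+a\s+bomb\b", re.IGNORECASE),
    "impersonation": re.compile(r"\bpretend\s+to\s+be\s+my\s+bank\b", re.IGNORECASE),
}


def moderate(text):
    """Report whether the text is allowed and which categories it matched."""
    matched = sorted(
        name for name, pattern in BLOCKED_PATTERNS.items() if pattern.search(text)
    )
    return {"allowed": not matched, "categories": matched}


def guarded_output(text, refusal="This request cannot be completed."):
    """Return the text unchanged if it passes moderation, else a refusal."""
    return text if moderate(text)["allowed"] else refusal


print(guarded_output("A short poem about spring."))
print(guarded_output("Step one: how to BUILD a bomb at home."))
```

Returning the matched categories alongside the decision makes it possible to log why something was blocked, which supports the accountability and redress mechanisms described earlier.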

Regulatory Efforts: A Global Response

Governments are beginning to step in, recognizing the societal implications of generative AI.

Examples include:

- EU AI Act – a risk-based framework regulating AI systems, including transparency rules for generative models

- US Executive Orders – calling for safety, fairness, and rights protection in AI systems

- China’s Interim Measures for Generative AI Services – emphasizing national security, content censorship, and model registration

While regulation lags behind the pace of innovation, a consensus is emerging: generative AI needs clear, enforceable norms to ensure it serves the public good.

The Human Side: Rethinking Creativity and Connection

Generative AI challenges how we think about creativity, originality, and human identity. When machines can write poetry, compose music, and design products, what role does the human creator play?

Ethics in this age isn’t just about rules; it’s about values:

- How do we preserve human meaning in a world of synthetic content?

- How do we teach the next generation to critically evaluate what they see and hear?

- How do we ensure that AI enhances—not erodes—empathy, dignity, and truth?

These are not questions for technologists alone. Artists, educators, policymakers, and communities all have a role to play in shaping the cultural norms around generative AI.

Conclusion

Generative AI has unlocked extraordinary capabilities—but with them come profound ethical challenges. As these models reshape media, communication, and creativity, we are confronted with choices that will define the digital age.

The question is no longer whether we can build intelligent machines that generate content; it is whether we should, how, and for whom. If we want AI to be a force for good, we must lead with principles: transparency, fairness, accountability, and above all, a deep respect for human values.

Ethics in AI isn’t a checkbox—it’s the foundation of trust, innovation, and a future where technology truly serves humanity.